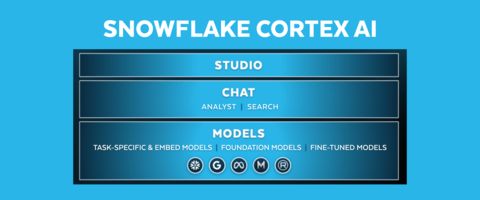

Snowflake Cortex AI delivers easy, efficient, and trusted enterprise AI to thousands of organizations — making it simple to create custom chat experiences, fine-tune best-in-class models, and expedite no-code AI development

Snowflake (NYSE: SNOW), the AI Data Cloud company, today announced at its annual user conference, Snowflake Summit 2024, new innovations and enhancements to Snowflake Cortex AI that unlock the next wave of enterprise AI for customers with easy, efficient, and trusted ways to create AI-powered applications. These include new chat experiences, which empower organizations to develop chatbots within minutes so they can talk directly to their enterprise data and get the answers they need, faster. In addition, Snowflake is further democratizing how any user can customize AI for specific industry use cases through a new no-code interactive interface, access to industry-leading large language models (LLMs), and serverless fine-tuning. Snowflake is also accelerating the path to operationalizing models with an integrated experience for machine learning (ML) through Snowflake ML, enabling developers to build, discover, and govern models and features across the ML lifecycle. Snowflake’s unified platform for generative AI and ML allows every part of the business to extract more value from its data, while enabling full security, governance, and control to deliver responsible, trusted AI at scale.



“Snowflake is at the epicenter of enterprise AI, putting easy, efficient, and trusted AI in the hands of every user so they can solve their most complex business challenges, without compromising on security or governance,” said Baris Gultekin, Head of AI, Snowflake. “Our latest advancements to Snowflake Cortex AI remove the barriers to entry so all organizations can harness AI to build powerful AI applications at scale and unlock unique differentiation with their enterprise data in the AI Data Cloud.”

Give Your Entire Enterprise the Power to Talk to Data Through New Chat Experiences

LLM-powered chatbots are a powerful way for any user to ask questions of their enterprise data in natural language, unlocking the insights organizations need for critical decision-making with greater speed and efficiency. Snowflake is unveiling two new chat capabilities, Snowflake Cortex Analyst (public preview soon) and Snowflake Cortex Search (public preview soon), that allow users to develop these chatbots in a matter of minutes against their structured and unstructured data, without operational complexity. Cortex Analyst, built with Meta’s Llama 3 and Mistral Large models, allows businesses to securely build applications on top of their analytical data in Snowflake. In addition, Cortex Search harnesses state-of-the-art retrieval and ranking technology from Neeva (acquired by Snowflake in May 2023) alongside Snowflake Arctic embed, so users can build applications against documents and other text-based datasets through enterprise-grade hybrid search (a combination of vector and text search) as a service.

“Data security and governance are of the utmost importance for Zoom when leveraging AI for our enterprise analytics. We rely on the Snowflake AI Data Cloud to support our internal business functions and develop customer insights as we continue to democratize AI across our organization,” said Awinash Sinha, Corporate CIO, Zoom. “By combining the power of Snowflake Cortex AI and Streamlit, we’ve been able to quickly build apps leveraging pre-trained large language models in just a few days. This is empowering our teams to use AI to quickly and easily access helpful answers.”

“Although businesses typically use dashboards to consume information from their data for strategic decision-making, this approach has some drawbacks including information overload, limited flexibility, and time-consuming development,” said Mukesh Dubey, Product Owner Data Platform, CH NA, Bayer. “What if internal functional users could ask specific questions directly on their enterprise data and get responses back with basic visualizations? The core of this capability is high-quality responses to a natural language query on structured data, used in an operationally sustainable way. This is exactly what Snowflake Cortex Analyst enables for us. What I’m most excited about is we’re just getting started, and we’re looking forward to unlocking more value with Snowflake Cortex AI.”

Data security is key to building production-grade AI applications and chat experiences, and then scaling them across enterprises. To that end, Snowflake is unveiling Snowflake Cortex Guard (generally available soon), which leverages Meta’s Llama Guard, an LLM-based input-output safeguard that filters and flags harmful content, such as violence and hate, self-harm, or criminal activity, across organizational data and assets. With Cortex Guard, Snowflake is further unlocking trusted AI for enterprises, helping customers ensure that available models are safe and usable.

Snowflake Strengthens AI Experiences to Accelerate Productivity

In addition to enabling the easy development of custom chat experiences, Snowflake is providing customers with pre-built AI-powered experiences fueled by Snowflake’s world-class models. With Document AI (generally available soon), users can easily extract content such as invoice amounts or contract terms from documents using Snowflake’s industry-leading multimodal LLM, Snowflake Arctic-TILT, which outperforms GPT-4 and secured a top score on the DocVQA benchmark, the standard for visual document question answering. Organizations including Northern Trust harness Document AI to intelligently process documents at scale, lowering operational overhead and increasing efficiency. Snowflake is also advancing its breakthrough text-to-SQL assistant, Snowflake Copilot (generally available soon), which combines the strengths of Mistral Large with Snowflake’s proprietary SQL generation model to accelerate productivity for every SQL user.

Unlock No-Code AI Development with the New Snowflake AI & ML Studio

Snowflake Cortex AI provides customers with a robust set of state-of-the-art models from leading providers including Google, Meta, Mistral AI, and Reka, in addition to Snowflake’s top-tier open-source LLM, Snowflake Arctic, to accelerate AI development. Snowflake is further democratizing how any user can bring these powerful models to their enterprise data with the new Snowflake AI & ML Studio (private preview), a no-code interactive interface for teams to get started with AI development and productize their AI applications faster. In addition, users can easily test and evaluate these models to find the best and most cost-effective fit for their specific use cases, ultimately accelerating the path to production while optimizing operating costs.

To help organizations further enhance LLM performance and deliver more personalized experiences, Snowflake is introducing Cortex Fine-Tuning (now in public preview), accessible through AI & ML Studio or a simple SQL function. This serverless customization is available for a subset of Meta and Mistral AI models. These fine-tuned models can then be easily used through a Cortex AI function, with access managed using Snowflake role-based access controls.

Streamline Model and Feature Management with Unified, Governed MLOps Through Snowflake ML

Once ML models and LLMs are developed, most organizations struggle with continuously operating them in production on evolving data sets. Snowflake ML brings MLOps capabilities to the AI Data Cloud, so teams can seamlessly discover, manage, and govern their features, models, and metadata across the entire ML lifecycle — from data pre-processing to model management. These centralized MLOps capabilities also integrate with the rest of Snowflake’s platform, including Snowflake Notebooks and Snowpark ML for a simple end-to-end experience.

Snowflake’s suite of MLOps capabilities includes the Snowflake Model Registry (now generally available), which allows users to govern the access and use of all types of AI models so they can deliver more personalized experiences and cost-saving automations with trust and efficiency. In addition, Snowflake is announcing the Snowflake Feature Store (now in public preview), an integrated solution for data scientists and ML engineers to create, store, manage, and serve consistent ML features for model training and inference, and ML Lineage (private preview), so teams can trace the usage of features, datasets, and models across the end-to-end ML lifecycle.

Continued Innovation at Snowflake Summit 2024

Snowflake also announced new innovations to its single, unified platform that provide thousands of organizations with increased flexibility and interoperability across their data; new tools that accelerate how developers build in the AI Data Cloud; a new collaboration with NVIDIA that customers and partners can harness to build customized AI data applications in Snowflake; the Polaris Catalog, a vendor-neutral, fully open catalog implementation for Apache Iceberg; and more at Snowflake Summit 2024.

Learn More:

- Read more about how AI and ML innovations enable users to talk to their data, securely customize LLMs, and streamline model operations in this blog post.

- Stay on top of the latest news and announcements from Snowflake on LinkedIn and Twitter / X.

Forward Looking Statements

This press release contains express and implied forward-looking statements, including statements regarding (i) Snowflake’s business strategy, (ii) Snowflake’s products, services, and technology offerings, including those that are under development or not generally available, (iii) market growth, trends, and competitive considerations, and (iv) the integration, interoperability, and availability of Snowflake’s products with and on third-party platforms. These forward-looking statements are subject to a number of risks, uncertainties and assumptions, including those described under the heading “Risk Factors” and elsewhere in the Quarterly Reports on Form 10-Q and the Annual Reports on Form 10-K that Snowflake files with the Securities and Exchange Commission. In light of these risks, uncertainties, and assumptions, actual results could differ materially and adversely from those anticipated or implied in the forward-looking statements. As a result, you should not rely on any forward-looking statements as predictions of future events.

© 2024 Snowflake Inc. All rights reserved. Snowflake, the Snowflake logo, and all other Snowflake product, feature and service names mentioned herein are registered trademarks or trademarks of Snowflake Inc. in the United States and other countries. All other brand names or logos mentioned or used herein are for identification purposes only and may be the trademarks of their respective holder(s). Snowflake may not be associated with, or be sponsored or endorsed by, any such holder(s).

About Snowflake

Snowflake makes enterprise AI easy, efficient and trusted. Thousands of companies around the globe, including hundreds of the world’s largest, use Snowflake’s AI Data Cloud to share data, build applications, and power their business with AI. The era of enterprise AI is here. Learn more at snowflake.com (NYSE: SNOW).

View source version on businesswire.com: https://www.businesswire.com/news/home/20240604216985/en/

Contacts

Media Contacts:

Kaitlyn Hopkins

Senior Product PR Lead, Snowflake

press@snowflake.com